Rahul Garg Hao Du Steven M. Seitz Noah Snavely

The top row shows the first five bases learned from images of Orvieto cathedral downloaded from Flickr. The first basis models the mean image, the second and third bases model shading, while the fourth and fifth bases model the specularities. The bottom row shows the reconstruction of Orvieto cathedral facade using 1,2,3,4 and 5 basis images respectively. The image on the far right is the original image.

Abstract:

Low-rank approximation of image

collections (e.g., via PCA) is a popular tool in many areas of computer

vision. Yet, surprisingly little is known justifying the observation

that images of an object or scene tend to be low dimensional, beyond

the special case of Lambertian scenes. This paper considers the

question of how many basis images are needed to span the space of

images of a scene under realworld lighting and viewing conditions,

allowing for general BRDFs. We establish new theoretical upper bounds

on the number of basis images necessary to represent a wide variety of

scenes under very general conditions, and perform empirical studies to

justify the assumptions. We then demonstrate a number of novel

applications of linear model for scene appearance for Internet

photo collections. These applications include, image reconstruction,

occluder-removal, and expanding field of view.

Paper:

The Dimensionality of Scene Appearance

Rahul Garg, Hao Du, Steven M. Seitz and Noah Snavely

In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Kyoto, 2009 (To Appear)

Supplementary material

Technical Report

Applications:

The Dimensionality of Scene Appearance

Rahul Garg, Hao Du, Steven M. Seitz and Noah Snavely

In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Kyoto, 2009 (To Appear)

Supplementary material

Technical Report

Applications:

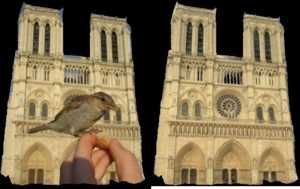

View Expansion |

Occluder Removal |