Shape and Materials by Example: A Photometric Stereo Approach

| Aaron Hertzmann | Steve Seitz |

| University of Toronto | University of Washington |

We present a technique for computing the geometry of objects with general reflectance properties from images. This approach has the following features:

Papers:

Aaron Hertzmann, Steven M. Seitz. Example-Based Photometric Stereo: Shape Reconstruction with General, Varying BRDFs.

IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 8, pp. 1254-1264, August 2005.

[PDF]

Aaron Hertzmann, Steven M. Seitz. Shape and Materials by Example: A Photometric Stereo Approach. Proc. IEEE CVPR 2003. Madison, WI. June 2003. Vol. 1. pp. 533-540. [PDF] [Input data used in this paper (144MB)] (Earlier conference version)

See also: Adrien Treuille, Aaron Hertzmann, Steven M. Seitz. Example-Based Stereo with General BRDFs. 8th European Conference on Computer Vision (ECCV 2004). Prague, Czech Republic, May 2004. [PDF]

Consider the simple case of two objects photographed together:

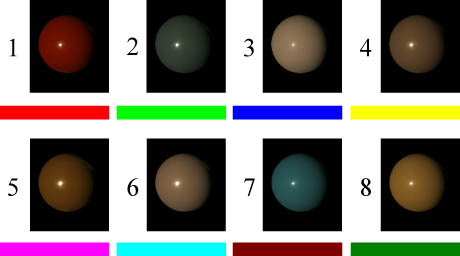

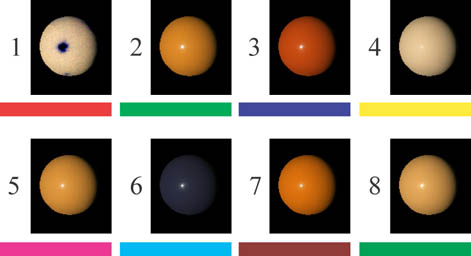

Both objects have the same material (in this case, provided by shiny green spray paint). We would like to determine the shape of the bottle, assuming that we know the shape of the sphere, and assuming that the lighting is distant and the camera is orthographic. Under these conditions, two points with the same surface orientation reflect the same light toward the viewer. We term this property orientation-consistency. For example, if a point is in highlight on the bottle, then it must have the same surface normal as the region in highlight on the sphere. However, the normals of points on the bottle with darker intensities are ambiguous, since there are multiple points on the sphere with the same intensity. We can address this by taking pictures under more lighting conditions:
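Because the sphere's shape is known and the camera is assumed orthographic, the normal at each sphere pixel follows directly from its image position. The sketch below illustrates this under stated assumptions: the sphere's image center (`cx`, `cy`) and `radius` in pixels are hypothetical inputs that would have to be measured from the photograph, and the function name is ours, not the authors'.

```python
import numpy as np

def sphere_normals(cx, cy, radius, height, width):
    """Unit normals of a sphere seen by an orthographic camera.

    cx, cy, radius are the sphere's image center and radius in pixels
    (hypothetical inputs, measured by hand or by circle fitting).
    Returns (normals, mask): normals has shape (height, width, 3) and
    mask is True for pixels that lie on the sphere.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    # Under orthographic projection, the x and y components of the unit
    # normal are the pixel offsets from the center, scaled by the radius.
    nx = (xs - cx) / radius
    ny = (ys - cy) / radius
    r2 = nx**2 + ny**2
    mask = r2 < 1.0
    # The z component (toward the camera) completes the unit vector.
    nz = np.sqrt(np.clip(1.0 - r2, 0.0, None))
    normals = np.dstack([nx, ny, nz])
    normals[~mask] = 0.0  # pixels off the sphere carry no normal
    return normals, mask
```

The sign of `ny` depends on whether the image y axis points up or down; the convention here simply follows array row order.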

With these images (we used eight in total), determining the normal of a point on the bottle is simply a matter of finding a point on the sphere with matching intensity under all illumination conditions. Once we recover all surface normals, we can estimate the shape of the bottle:
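The matching step can be sketched as a nearest-neighbor search over per-pixel intensity vectors. This is a minimal illustration, not the authors' implementation: the array layout, the function name, and the use of squared Euclidean distance as the matching criterion are all assumptions made for the example.

```python
import numpy as np

def match_normals(target_obs, ref_obs, ref_normals):
    """Assign each target pixel the normal of its best-matching sphere pixel.

    target_obs:  (P, K) intensities of P target pixels under K illuminations.
    ref_obs:     (Q, K) intensities of Q sphere pixels under the same K.
    ref_normals: (Q, 3) known unit normals of the sphere pixels.
    Returns a (P, 3) array of estimated normals.
    """
    # Squared distance between every target and every reference
    # observation vector, computed by broadcasting to shape (P, Q).
    diff = target_obs[:, None, :] - ref_obs[None, :, :]
    d2 = (diff ** 2).sum(axis=2)
    # Orientation-consistency: the closest sphere pixel shares the normal.
    best = d2.argmin(axis=1)
    return ref_normals[best]
```

The brute-force distance matrix needs memory proportional to P × Q × K, so for full-resolution images one would likely process the target in chunks or use a spatial search structure instead.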

Click on the images above for full-size reconstructions. Here is our 3D reconstruction in PLY format, which can be viewed using Scanalyze.

Complex materials

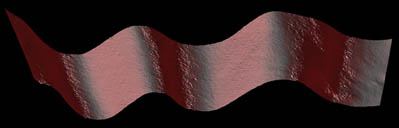

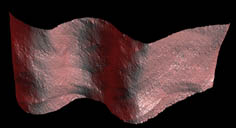

This approach can be applied directly to objects whose materials are normally very difficult for computer vision algorithms to handle, including velvet and anisotropic materials.

| Input image of velvet (one of fourteen) | Reconstructed geometry | Reconstructed geometry, texture-mapped |

| Input image of an anisotropic material (one of fourteen) | Reconstructed geometry | Reconstructed geometry, texture-mapped |

To our knowledge, this is the first time in the computer vision literature that a shape reconstruction technique has been successfully applied to an anisotropic material. The reflectance in this example is sufficiently complex that it is very difficult for humans to perceive the shape, yet the algorithm performs quite well.

Multiple materials

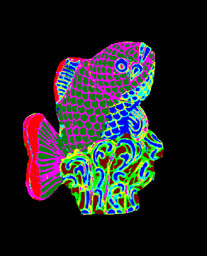

The most interesting case occurs when we do not know the materials or the shape of the target object. For a shiny object, we can photograph the object together with a diffuse and a shiny sphere:

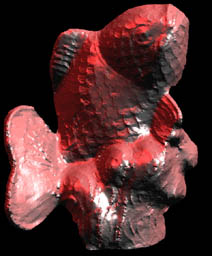

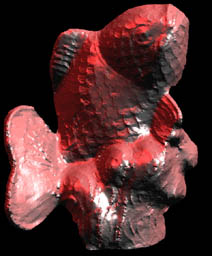

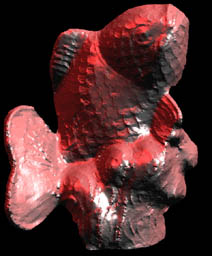

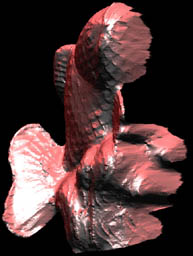

Fourteen images were taken under varying illumination conditions. We assume that every material on the fish is a linear combination of the materials on the spheres. Under this assumption, it is straightforward to determine the shape of the fish by solving for the normals and materials that match the pixel intensities of the fish at each point in each image. Here are some views of a 3D reconstruction of this object, shown together with texture-mapped versions:
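One way to picture the joint solve for normal and material is the following sketch: for each candidate normal, fit the target pixel's intensities as a least-squares combination of the reference spheres' intensities at that normal, then keep the candidate with the smallest residual. The array layout, the function name, and the use of an unconstrained least-squares fit are assumptions for illustration; the paper should be consulted for the actual procedure.

```python
import numpy as np

def fit_normal_and_material(target_obs, ref_obs, ref_normals):
    """Pick the normal and material weights that best explain one pixel.

    target_obs:  (K,) intensities of one target pixel under K illuminations.
    ref_obs:     (Q, K, M) intensities of M reference materials at each of
                 Q candidate normals under the same K illuminations.
    ref_normals: (Q, 3) unit normals of the candidates.
    Returns (normal, coeffs), where coeffs has shape (M,).
    """
    # Least-squares material weights for every candidate normal at once,
    # using the batched pseudo-inverse, shape (Q, M, K).
    pinv = np.linalg.pinv(ref_obs)
    coeffs = pinv @ target_obs                       # (Q, M)
    # Intensities predicted by each candidate and their squared error.
    pred = np.einsum('qkm,qm->qk', ref_obs, coeffs)  # (Q, K)
    residual = ((pred - target_obs) ** 2).sum(axis=1)
    best = residual.argmin()
    return ref_normals[best], coeffs[best]
```

With two reference spheres (M = 2) and fourteen images (K = 14), each candidate fit is overdetermined, which is what lets the residual discriminate between normals. This sketch places no constraint on the weights, so they may come out negative.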

Here is a comparison of our reconstruction with a laser-scanned version of the same object (using a CyberWare Model 15).

| Our reconstruction (mesh in PLY format) | Laser scan (mesh in PLY format) |

In order to laser scan the object, it first had to be covered with a thin diffuse paint. Nonetheless, our method extracts distinctly more surface detail than the laser scanner. While some of this detail is likely due to the higher resolution of the camera compared to the laser scanner, other differences may be due to the fact that the fish is coated with a thin layer of transparent lacquer. Our approach is likely capturing the surface under the lacquer, which does contain a relief texture, while the laser scan of the painted fish captures the smoothed, lacquered outer surface. (The above PLY format meshes may be viewed using Scanalyze.)

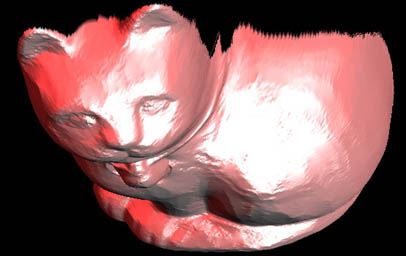

Here is another shiny object we captured:

Using thirteen images of this object under varying illumination, we obtained the following reconstructions:

Here is our 3D reconstruction in PLY format.

Clustering

Most image segmentation techniques operate directly on image pixels, and are unable to distinguish shading variations from material (e.g., albedo) variations. Our algorithm makes it possible to cluster materials on the surface, while avoiding some ambiguities in the surface materials (see the paper for details).

Copyright (c) 2003 Aaron Hertzmann and Steven M. Seitz