Expressive Character Animation

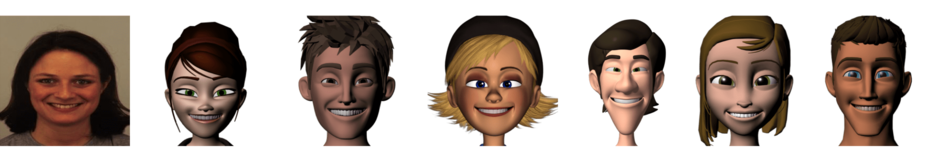

To tell a successful animated story, a character's emotional state must be staged so that it is clear and unmistakable. How the viewer perceives the character's facial expressions is key to staging that emotion. Traditionally, animators and automatic expression transfer systems rely on geometric markers and features modeled on human faces to create character expressions, yet these features do not transfer accurately to stylized character faces. Relying on human geometric features alone leads to stylized character expressions that are perceptually confusing or that differ from the intended expression. Our framework avoids these pitfalls by learning to transfer human facial expressions to character expressions that are both perceptually consistent and geometrically correct.

Publications

[4] Personalized Face Modeling for Improved Face Reconstruction and Motion Retargeting

[webpage]

Bindita Chaudhuri, Noranart Vesdapunt, Linda Shapiro, Baoyuan Wang

ECCV 2020 (Spotlight)

[3] Joint Face Detection and Facial Motion Retargeting for Multiple Faces

[webpage]

Bindita Chaudhuri, Noranart Vesdapunt, Baoyuan Wang

CVPR 2019

[2] Learning to Generate 3D Stylized Character Expressions from Humans

[poster]

[talk]

Deepali Aneja, Bindita Chaudhuri, Alex Colburn, Gary Faigin, Linda G. Shapiro, Barbara Mones

WACV 2018

[1] Modeling Stylized Character Expressions via Deep Learning

Deepali Aneja, Alex Colburn, Gary Faigin, Linda G. Shapiro, Barbara Mones

ACCV 2016 (Oral)

Posters

[1] Learning Stylized Character Expressions from Humans

Deepali Aneja, Alex Colburn, Gary Faigin, Linda G. Shapiro, Barbara Mones

WiCV, CVPR 2017

Facial Expression Research Group 2D Database (FERG-DB)

FERG-DB is a database of 2D images of stylized characters with annotated facial expressions in seven categories (anger, disgust, fear, joy, neutral, sadness and surprise). The database contains 55767 annotated face images of six stylized characters (Aia, Mery, Bonnie, Ray, Jules and Malcolm). The characters were modeled in Maya and rendered out in 2D to create the images. Download the dataset!
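As a rough illustration of how the annotations could be consumed, the sketch below indexes images by character and expression. The directory layout `<root>/<character>/<character>_<expression>/<image>.png` and the function names `index_images` and `count_by_label` are assumptions made for this example, not a documented part of the dataset; adjust them to match the downloaded archive.

```python
from collections import Counter
from pathlib import Path

# The seven expression categories and six characters named in the description.
EXPRESSIONS = ("anger", "disgust", "fear", "joy", "neutral", "sadness", "surprise")
CHARACTERS = ("aia", "mery", "bonnie", "ray", "jules", "malcolm")


def index_images(root):
    """Return a list of (path, character, expression) tuples.

    ASSUMED layout (hypothetical):
    <root>/<character>/<character>_<expression>/<image>.png
    """
    samples = []
    for path in sorted(Path(root).glob("*/*/*.png")):
        character = path.parent.parent.name.lower()
        # The folder name is assumed to end with "_<expression>".
        expression = path.parent.name.lower().rsplit("_", 1)[-1]
        # Skip anything that does not match a known character and category.
        if character in CHARACTERS and expression in EXPRESSIONS:
            samples.append((path, character, expression))
    return samples


def count_by_label(samples):
    """Count images per (character, expression) pair."""
    return Counter((character, expression) for _, character, expression in samples)
```

Calling `count_by_label(index_images("path/to/dataset"))` gives a quick check of how balanced the seven categories are for each character before training on the images.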

Facial Expression Research Group 3D Database (FERG-3D-DB)

FERG-3D-DB is a database of 3D rigs of stylized characters with annotated facial expressions in seven categories (anger, disgust, fear, joy, neutral, sadness and surprise). The database contains 39574 annotated examples of rig parameters and the Python code to transfer the parameters to the 3D rigs in Maya. The parameters are provided for publicly available rigs of four stylized characters (Mery, Bonnie, Ray and Malcolm). Download the dataset!
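The transfer code shipped with the database is not reproduced here. As a minimal sketch of the general idea, the function below writes a set of rig parameters onto rig attributes. It assumes the parameters are available as a dictionary mapping `"control.attribute"` names to numeric values, which is a hypothetical format, and the control names in the usage note are invented for illustration. Inside Maya it falls back to `maya.cmds.setAttr`; outside Maya any callable can be passed in.

```python
def apply_rig_parameters(parameters, set_attr=None):
    """Set each rig attribute to its value and return the names applied.

    parameters: dict mapping "control.attribute" -> number (ASSUMED format).
    set_attr: callable(name, value); defaults to maya.cmds.setAttr in Maya.
    """
    if set_attr is None:
        # Imported lazily so this function can also be loaded outside Maya.
        from maya import cmds
        set_attr = cmds.setAttr
    applied = []
    for name, value in sorted(parameters.items()):
        set_attr(name, float(value))
        applied.append(name)
    return applied
```

Outside Maya, the behavior can be checked by recording the calls, for example `apply_rig_parameters({"jaw_ctrl.rotateX": 0.5}, set_attr=print)`, where `jaw_ctrl` is a made-up control name.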

People

If you have any questions, or just want to say hi, email us!