Fast Depth Densification for Occlusion-Aware Augmented Reality

University of Washington


Abstract

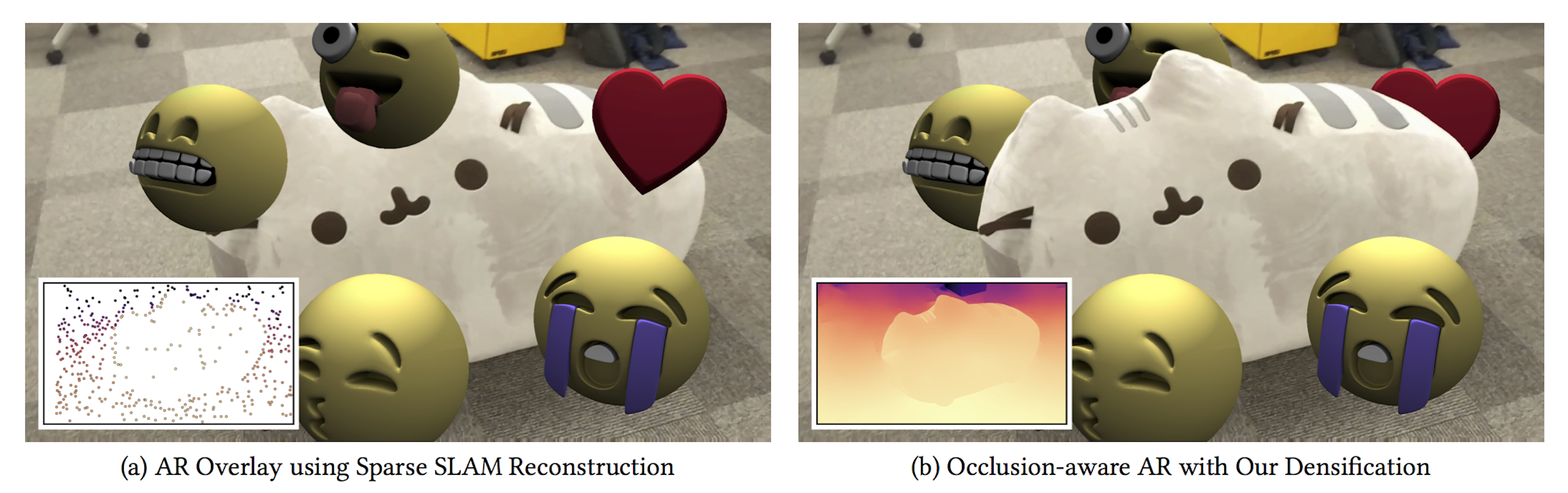

Current AR systems only track sparse geometric features but do not compute depth for all pixels. For this reason, most AR effects are pure overlays that can never be occluded by real objects. We present a novel algorithm that propagates sparse depth to every pixel in near real time. The produced depth maps are spatio-temporally smooth but exhibit sharp discontinuities at depth edges. This enables AR effects that can fully interact with, and be occluded by, the real scene. Our algorithm takes a video and a sparse SLAM reconstruction as input. It starts by estimating soft depth edges from the gradient of optical flow fields. Because optical flow is unreliable near occlusions, we compute forward and backward flow fields and fuse the resulting depth edges using a novel reliability measure. We then localize the depth edges by thinning and aligning them with image edges. Finally, we optimize the propagated depth to be smooth while encouraging discontinuities at the recovered depth edges. We present results for numerous real-world examples and demonstrate the effectiveness of our approach for several occlusion-aware AR video effects. To quantitatively evaluate our algorithm, we characterize the properties that make depth maps desirable for AR applications and present novel evaluation metrics that capture how well these are satisfied. Our results compare favorably to a set of competitive baseline algorithms in this context.
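To make the first step more concrete, here is a minimal sketch of how soft depth edges might be derived from the gradient of a single optical flow field. It is an illustration under stated assumptions, not the paper's implementation: the function name `soft_depth_edges`, the use of the combined gradient magnitude of both flow components, and the normalization to [0, 1] are all hypothetical choices. The forward/backward fusion, reliability measure, thinning, and alignment steps are not shown.

```python
import numpy as np

def soft_depth_edges(flow):
    """Return a soft edge map in [0, 1] from a dense flow field.

    flow: array of shape (H, W, 2) holding per-pixel displacement (u, v).
    Hypothetical helper; the normalization below is an assumption.
    """
    # np.gradient returns derivatives along axis 0 (rows) and then axis 1 (columns).
    du_dy, du_dx = np.gradient(flow[..., 0])
    dv_dy, dv_dx = np.gradient(flow[..., 1])

    # A large flow gradient suggests a jump in parallax, i.e. a likely depth edge.
    magnitude = np.sqrt(du_dx**2 + du_dy**2 + dv_dx**2 + dv_dy**2)

    # Scale to [0, 1] so the result can be read as a soft edge strength.
    peak = magnitude.max()
    return magnitude / peak if peak > 0 else magnitude

# Synthetic example: the left half moves by 1 px and the right half by 5 px,
# mimicking a far background next to a near foreground object.
flow = np.zeros((4, 8, 2))
flow[:, :4, 0] = 1.0
flow[:, 4:, 0] = 5.0
edges = soft_depth_edges(flow)
```

In this synthetic case the response is concentrated in the two columns that straddle the motion boundary and is zero elsewhere. A response that is several pixels wide on real footage is one reason a later thinning and localization step is useful.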

BibTeX

@article{Occlusion2018,
  author = {Aleksander Holynski and Johannes Kopf},
  title = {Fast Depth Densification for Occlusion-aware Augmented Reality},
  journal = {ACM Transactions on Graphics (Proc. SIGGRAPH Asia)},
  publisher = {ACM},
  volume = {37},
  number = {6},
  year = {2018}
}