Surface Light Field Fusion

3DV 2018

Jeong Joon Park - University of Washington

Richard Newcombe - Facebook Reality Labs

Steve Seitz - University of Washington

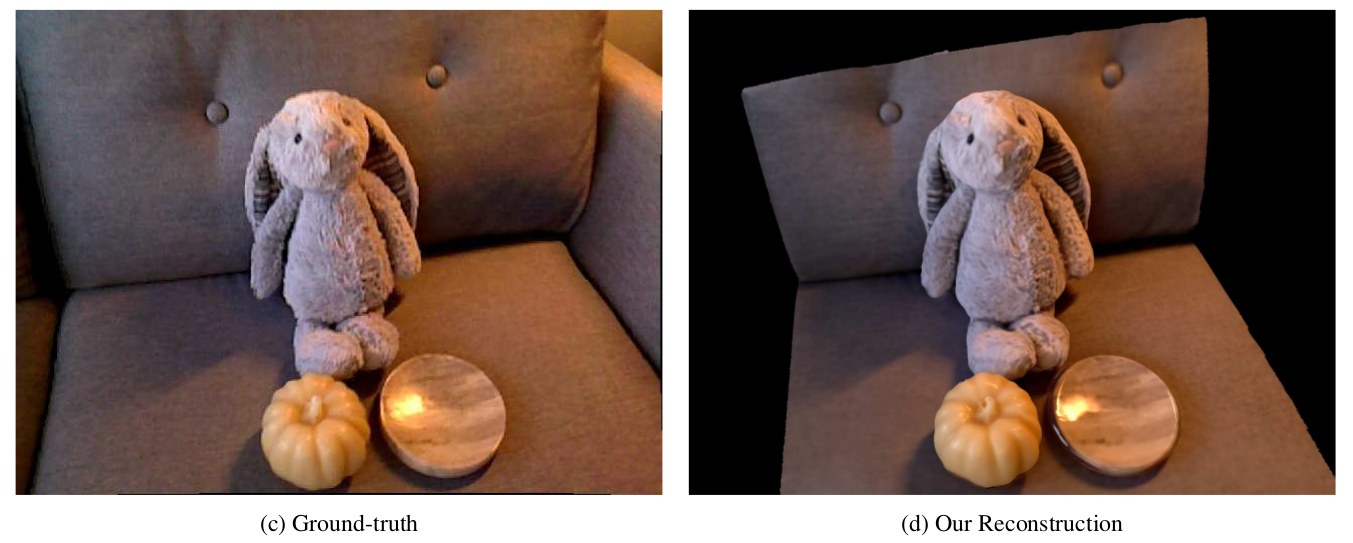

Abstract -- We present an approach for interactively scanning highly reflective objects with a commodity RGBD sensor. In addition to shape, our approach models the surface light field, encoding scene appearance from all directions. By factoring the surface light field into view-independent and wavelength-independent components, we arrive at a representation that can be robustly estimated with IR-equipped commodity depth sensors and that achieves high-quality results.

Synthetic renderings of highly reflective objects reconstructed with a hand-held commodity RGBD sensor. Note the faithful texture, specular highlights, and global effects such as interreflections (2nd image) and shadows (3rd image).
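The abstract's central idea is a factored appearance: a view-independent part, which may differ across color channels, plus a view-dependent part assumed to be wavelength-independent, so it is shared by every channel. Under that assumption, a measurement in one band (for example IR) constrains the view-dependent part for all bands. The Python sketch below only illustrates that structure at a single surface point. It is not the paper's model: the Blinn-Phong specular lobe, the single point light, and the names `factored_radiance`, `specular_gain`, and `shininess` are hypothetical choices made for illustration.

```python
import numpy as np


def normalize(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def factored_radiance(diffuse_rgb, specular_gain, shininess, normal, view_dir, light_dir):
    """Evaluate a toy factored appearance model at one surface point.

    Hypothetical assumptions (illustration only, not the paper's model):
    - the view-independent term is a per-channel diffuse color;
    - the view-dependent term is a Blinn-Phong lobe that is
      wavelength-independent, i.e. one scalar added to every channel.
    """
    n = normalize(normal)
    v = normalize(view_dir)
    l = normalize(light_dir)
    h = normalize(l + v)  # half vector between light and view directions
    specular = specular_gain * max(float(np.dot(n, h)), 0.0) ** shininess
    # NumPy broadcasting adds the single specular scalar to all channels.
    return np.asarray(diffuse_rgb, dtype=float) + specular


if __name__ == "__main__":
    albedo = [0.6, 0.2, 0.1]
    head_on = factored_radiance(albedo, 0.5, 50, [0, 0, 1], [0, 0, 1], [0, 0, 1])
    grazing = factored_radiance(albedo, 0.5, 50, [0, 0, 1], [1, 0, 0.2], [0, 0, 1])
    print(head_on, grazing)
```

With albedo `[0.6, 0.2, 0.1]`, `specular_gain=0.5`, and the light and view both along the normal, the call returns `[1.1, 0.7, 0.6]`: each channel gains the same 0.5. Moving the view toward grazing shrinks the shared specular term toward zero while the diffuse colors stay fixed, which is the view-independent/wavelength-independent split in miniature.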

Paper: PDF (12.0 MB)

Citation: Park, Jeong Joon, Richard Newcombe, and Steve Seitz. "Surface Light Field Fusion." 2018 International Conference on 3D Vision (3DV). IEEE, 2018.

(BibTeX)

Contact Author: Jeong Joon Park, jjpark7[at]cs[dot]washington[dot]edu

Acknowledgements: This work was supported by funding from the National Science Foundation grant IIS-1538618, Intel, Google, and the UW Reality Lab.
