
Expression generation

We explored several ways of designing facial expressions from a library of expressions. The main idea is to generate new facial expressions by combining existing ones linearly. We combine the 3D face models using 3D morphing techniques.
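One way to write this linear combination is shown below. It assumes that every expression is a 3D mesh whose vertices are in one-to-one correspondence across expressions, and the notation is introduced here for illustration only:

$$
\mathbf{v}_i = \sum_{k=1}^{n} w_k \, \mathbf{v}_i^{(k)}, \qquad \sum_{k=1}^{n} w_k = 1,
$$

where $\mathbf{v}_i^{(k)}$ is the position of vertex $i$ in expression $k$ and $w_k$ is the weight of expression $k$. Requiring the weights to sum to 1 corresponds to percentages that add up to 100 %. For a 50 % "surprise" / 50 % "sadness" blend, $w_{\text{surprise}} = w_{\text{sadness}} = 0.5$.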

The simplest way to combine facial expressions is to assign a single weight, or percentage, to each contributing expression. The following example shows a blend of 50% "surprise" and 50% "sadness" that yields a "worried" expression.

[Figure: 50 % "Surprise" + 50 % "Sadness" = "Worried"]
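The global blend can be sketched in Python as follows. This is a minimal illustration, not the original implementation. It assumes that each expression is stored as a list of (x, y, z) vertex positions with the same vertex order in every mesh, that the weights sum to 1, and that only geometry is blended. The names `blend_expressions`, `surprise`, `sadness` and `worried`, as well as all coordinates, are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical mesh representation: one (x, y, z) position per vertex, with
# vertex i describing the same facial point in every expression mesh.
Vertex = Tuple[float, ...]
Mesh = List[Vertex]


def blend_expressions(meshes: Dict[str, Mesh], weights: Dict[str, float]) -> Mesh:
    """Linearly blend expression meshes using one global weight per expression."""
    total = sum(weights.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"weights must sum to 1 (100 %), got {total}")
    names = list(weights)
    n_vertices = len(meshes[names[0]])
    if any(len(meshes[name]) != n_vertices for name in names):
        raise ValueError("all blended meshes must have the same number of vertices")
    blended: Mesh = []
    for i in range(n_vertices):
        # Each coordinate of vertex i is the weighted sum of that coordinate
        # across all contributing expressions.
        blended.append(tuple(
            sum(w * meshes[name][i][axis] for name, w in weights.items())
            for axis in range(3)
        ))
    return blended


# Toy two-vertex "meshes" (made-up coordinates, not real face data).
surprise = [(0.0, 1.0, 0.0), (1.0, 2.0, 0.0)]
sadness = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5)]
worried = blend_expressions(
    {"surprise": surprise, "sadness": sadness},
    {"surprise": 0.5, "sadness": 0.5},
)
print(worried)  # [(0.0, 0.5, 0.0), (1.0, 1.0, 0.25)]
```

Because every vertex uses the same weights, a single percentage per expression controls the whole face.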

To span a broader set of facial expressions, we also combine faces in a localized way: we split the faces into several regions that behave coherently and assign weights to each region independently. The next example shows the design of a "fake smile" by combining the top part of a "neutral" expression with the bottom part of a "joy" expression.

[Figure: "Fake smile"]
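Localized blending changes only where the weights come from: each vertex uses the weights of the region it belongs to. The sketch below assumes a hypothetical per-vertex region label (`vertex_region`) and per-region weight tables (`region_weights`). The function name and the toy coordinates are illustrative and are not taken from the original system.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical mesh representation: one (x, y, z) per vertex, with vertices
# in correspondence across all expression meshes.
Vertex = Tuple[float, ...]
Mesh = List[Vertex]


def blend_by_region(
    meshes: Dict[str, Mesh],
    vertex_region: Sequence[str],
    region_weights: Dict[str, Dict[str, float]],
) -> Mesh:
    """Blend expressions with an independent set of weights for each face region.

    vertex_region[i] is the (hypothetical) region label of vertex i, and
    region_weights[region][name] is the weight of expression `name` in that region.
    """
    for region, weights in region_weights.items():
        total = sum(weights.values())
        if abs(total - 1.0) > 1e-9:
            raise ValueError(f"weights for region {region!r} sum to {total}, expected 1")
    blended: Mesh = []
    for i, region in enumerate(vertex_region):
        weights = region_weights[region]  # KeyError if a region has no weights
        # Weighted sum of vertex i over the expressions used in its region.
        blended.append(tuple(
            sum(w * meshes[name][i][axis] for name, w in weights.items())
            for axis in range(3)
        ))
    return blended


# Toy example: vertex 0 lies in the top region, vertex 1 in the bottom region.
neutral = [(0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]
joy = [(0.0, 1.2, 0.1), (0.0, -0.8, 0.3)]
fake_smile = blend_by_region(
    {"neutral": neutral, "joy": joy},
    vertex_region=["top", "bottom"],
    region_weights={"top": {"neutral": 1.0}, "bottom": {"joy": 1.0}},
)
print(fake_smile)  # [(0.0, 1.0, 0.0), (0.0, -0.8, 0.3)]
```

With hard region labels like these, neighbouring vertices on either side of a region boundary can receive very different blends, which may produce a visible seam. A smoother variant would let each vertex's weights change gradually across region boundaries.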

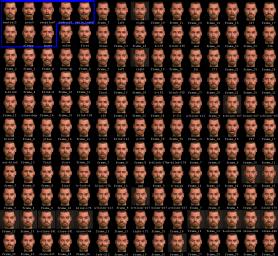

The following faces were generated from eight initial facial expressions (top left).
