Modeling

Model fitting

We model faces by fitting a generic 3D face model to a set of images. We start with a set of 4 or 5 images, all taken simultaneously from different viewpoints.

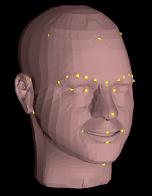

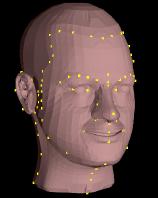

The mesh is fitted to the photographs by selecting feature points on the mesh and specifying where these points should project on the photos. From these annotations we recover the 3D locations of the feature points and the camera parameters corresponding to the photographs. To deform the geometry we use a sparse data interpolation technique that deforms the mesh based on the recovered positions of the feature points. Using these techniques, the mesh can be iteratively adjusted until we are satisfied with its shape.
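As a rough illustration of the sparse data interpolation step, the sketch below spreads the displacements of a few feature points over all mesh vertices with radial basis functions. The Gaussian kernel, the `sigma` parameter, and the function names `fit_deformation` and `deform` are assumptions made for this example; the text above does not say which interpolant is actually used.

```python
import math

def solve(A, B):
    """Solve A X = B (A is n x n, B is n x m) by Gauss-Jordan elimination
    with partial pivoting. Inputs are lists of lists."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: feature points may coincide")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [[M[i][n + j] / M[i][i] for j in range(len(B[0]))] for i in range(n)]

def kernel(r, sigma=1.0):
    # Assumed radial basis function (Gaussian); other kernels are possible.
    return math.exp(-(r / sigma) ** 2)

def fit_deformation(src_pts, dst_pts, sigma=1.0):
    """Find one 3D weight per feature point so that the interpolated
    displacement at each source feature point equals dst - src."""
    A = [[kernel(math.dist(p, q), sigma) for q in src_pts] for p in src_pts]
    D = [[d - s for s, d in zip(p, q)] for p, q in zip(src_pts, dst_pts)]
    return solve(A, D)

def deform(vertices, src_pts, weights, sigma=1.0):
    """Move every vertex by the interpolated displacement field."""
    out = []
    for v in vertices:
        phi = [kernel(math.dist(v, p), sigma) for p in src_pts]
        out.append(tuple(
            v[k] + sum(w[k] * f for w, f in zip(weights, phi))
            for k in range(3)
        ))
    return out
```

Here `src_pts` would be the feature points on the generic mesh and `dst_pts` their recovered 3D positions. With a Gaussian kernel the displacement fades with distance from the feature points, so `sigma` would need to be chosen relative to the size of the face model.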

To facilitate the annotation process, we use a set of polylines on the 3D model that correspond to easily identifiable facial features. These features can be quickly annotated by outlining them on the photographs.

Texture extraction

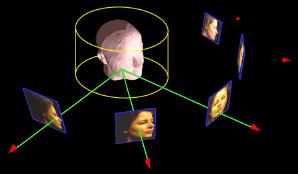

To dress up our 3D face model we extract a texture map from the photos. The texture is obtained by projecting each of the photographs onto a virtual cylinder. Using the recovered camera parameters we are able to trace rays from each point on the face surface back to the image planes. The different image samples are then combined in a weighted sum to produce the final texture.
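The two ingredients of this step, the cylindrical parameterization and the weighted blend, can be sketched as follows. This is only an illustration under stated assumptions: the cylinder axis is taken to be the y axis, the per-photo weight is the clamped cosine between the surface normal and the direction to the camera, and the weighted sum is normalized by the total weight. None of these choices is specified in the text, and the function names are hypothetical.

```python
import math

def cylindrical_uv(point, y_min, y_max):
    """Map a 3D surface point to texture coordinates (u, v) on a cylinder
    whose axis is the y axis (assumption). u and v lie in [0, 1] for
    points with y between y_min and y_max."""
    x, y, z = point
    theta = math.atan2(x, z)                  # angle around the axis
    u = (theta + math.pi) / (2.0 * math.pi)
    v = (y - y_min) / (y_max - y_min)
    return u, v

def view_weight(normal, point, camera_center):
    """Assumed weighting: cosine of the angle between the surface normal
    and the direction to the camera, clamped to zero for back-facing views."""
    to_cam = [c - p for c, p in zip(camera_center, point)]
    cam_len = math.sqrt(sum(t * t for t in to_cam))
    n_len = math.sqrt(sum(n * n for n in normal))
    if cam_len == 0.0 or n_len == 0.0:
        return 0.0
    cos = sum(n * t for n, t in zip(normal, to_cam)) / (cam_len * n_len)
    return max(0.0, cos)

def blend(samples, weights):
    """Normalized weighted sum of the color samples taken from each photo.
    Returns None when no photo sees the point (all weights are zero)."""
    total = sum(weights)
    if total == 0.0:
        return None
    channels = len(samples[0])
    return tuple(
        sum(w * s[k] for w, s in zip(weights, samples)) / total
        for k in range(channels)
    )
```

For each texel, one would find the corresponding surface point, project it into every photograph with the recovered camera parameters to get one color sample per photo, and pass those samples with their weights to `blend`.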

Renderings

(Click on a picture to view a high-resolution version.)