Learning Behavior Styles with Inverse Reinforcement Learning

Abstract

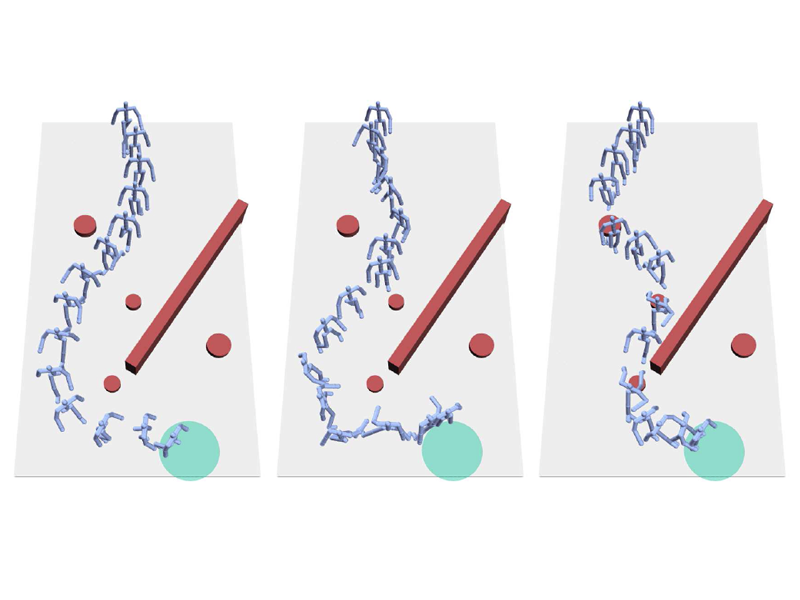

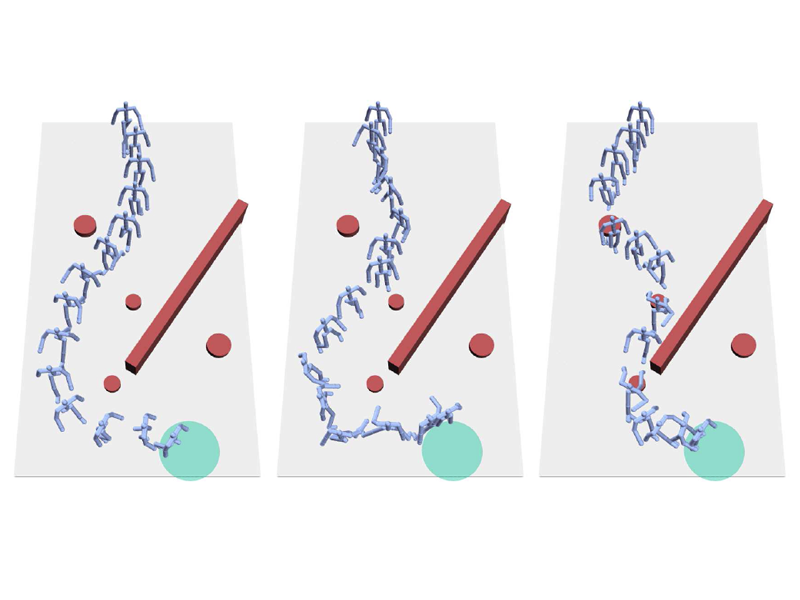

We present a method for inferring the behavior styles of character controllers from a small set of examples. We show that a rich set of behavior variations can be captured by determining the appropriate reward function in the reinforcement learning framework, and show that the discovered reward function can be applied to different environments and scenarios. We also introduce a new algorithm to recover the unknown reward function that improves over the original apprenticeship learning algorithm. We show that the reward function representing a behavior style can be applied to a variety of different tasks, while still preserving the key features of the style present in the given examples. We describe an adaptive process where an author can, with just a few additional examples, refine the behavior so that it has better generalization properties.
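As background for the reward-recovery step mentioned above, the following is a minimal sketch of the feature-expectation idea behind the original apprenticeship learning formulation. It is not the improved algorithm introduced in the paper. It assumes the reward is linear in a state feature vector, and the names `feature_expectations` and `reward_weights`, the toy trajectories, and the discount factor are all hypothetical choices made for illustration.

```python
import numpy as np

def feature_expectations(trajectories, gamma=0.9):
    """Average discounted sum of feature vectors over a set of trajectories.

    Each trajectory is a sequence of per-step feature vectors (assumed input format).
    """
    totals = []
    for traj in trajectories:
        traj = np.asarray(traj, dtype=float)        # shape (T, k)
        discounts = gamma ** np.arange(len(traj))   # shape (T,)
        totals.append(discounts @ traj)             # discounted feature sum, shape (k,)
    return np.mean(totals, axis=0)

def reward_weights(mu_expert, mu_policy):
    """Unit-norm weight vector pointing from the policy's feature
    expectations toward the expert's (one step of the classic scheme)."""
    diff = mu_expert - mu_policy
    norm = np.linalg.norm(diff)
    return diff / norm if norm > 0 else diff

# Toy data: the "expert" stays in states with feature 0 active,
# while the current policy stays in states with feature 1 active.
expert = [[[1.0, 0.0], [1.0, 0.0]]]
policy = [[[0.0, 1.0], [0.0, 1.0]]]

mu_e = feature_expectations(expert, gamma=0.5)
mu_p = feature_expectations(policy, gamma=0.5)
w = reward_weights(mu_e, mu_p)

# Under the linear-reward assumption, reward(s) = w . phi(s)
print(mu_e, mu_p, w)
```

In this toy case the recovered weights reward the feature the examples exhibit and penalize the one they avoid. A full method would alternate this step with solving for a new policy under the current reward.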

Project Members

Resources


Learning Behavior Styles with Inverse Reinforcement Learning

Lee, S., Popović, Z.

ACM Transactions on Graphics 29(3) (SIGGRAPH 2010)

[Paper (2.4 Mb)]


SIGGRAPH video (5 mins; requires QuickTime to view)

[Movie (34.7 Mb)]


Support