Momentum-based Parameterization of Dynamic Character Motion

This paper presents a system for rapid editing of highly dynamic motion capture data. At the heart of this system is an optimization algorithm that transforms the captured motion so that it satisfies high-level user constraints while keeping the linear and angular momentum of the motion physically plausible. Unlike most previous approaches to motion editing, our algorithm does not require pose specification or model reduction, and the user need only specify high-level changes to the input motion. To preserve the dynamic behavior of the input motion, we introduce a spline-based parameterization that matches the linear and angular momentum patterns of the motion capture data. Because our algorithm provides a good initial state for the optimization and therefore converges rapidly, the user can efficiently generate a large family of realistic motions from a single input motion. The algorithm can then populate the dynamic space of motions by simple interpolation, effectively parameterizing the space of realistic motions. We show how this framework can be used to produce an effective interface for rapid creation of dynamic animations, as well as to drive the dynamic motion of a character in real time.
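As a rough illustration of the interpolation step mentioned above, the following Python sketch blends two pre-computed motions from a family indexed by a single scalar parameter, for example a landing distance. The function name `interpolate_motion`, the frame-by-frame list layout, and the choice of linear blending between neighboring samples are all assumptions made for this sketch; they are not taken from the paper, and blending alone does not enforce any momentum constraint.

```python
from bisect import bisect_right


def interpolate_motion(params, motions, query):
    """Linearly blend the two sample motions whose parameters bracket `query`.

    Assumptions (hypothetical layout, not from the paper):
      params  -- strictly increasing list of scalar parameters, one per motion
      motions -- list of motions; each motion is a list of frames, each frame
                 a list of degree-of-freedom values; all motions are
                 time-aligned and have the same shape
    """
    if len(params) != len(motions) or len(params) < 2:
        raise ValueError("need at least two samples, one parameter per motion")
    if not params[0] <= query <= params[-1]:
        raise ValueError("query lies outside the sampled parameter range")

    # Index of the upper bracketing sample, clamped so that a query equal to
    # the last parameter still has a valid lower neighbor.
    hi = min(bisect_right(params, query), len(params) - 1)
    lo = hi - 1

    # Blend weight: 0 at the lower sample, 1 at the upper sample.
    w = (query - params[lo]) / (params[hi] - params[lo])

    return [
        [(1.0 - w) * a + w * b for a, b in zip(frame_lo, frame_hi)]
        for frame_lo, frame_hi in zip(motions[lo], motions[hi])
    ]


# Two toy "motions" with two frames and two degrees of freedom each.
short_jump = [[0.0, 0.0], [1.0, 2.0]]
long_jump = [[0.0, 0.0], [3.0, 4.0]]
blended = interpolate_motion([1.0, 2.0], [short_jump, long_jump], 1.5)
print(blended)
```

Because every sample in such a family would come from the optimization, nearby samples are expected to be similar enough that a simple blend stays close to a plausible motion; how densely the family must be sampled for that to hold is not something this sketch addresses.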

Papers

Abe, Y., Liu, C. K., and Popović, Z. Momentum-based Parameterization of Dynamic Character Motion (ACM SIGGRAPH / Eurographics Symposium on Computer Animation 2004)

Abe, Y., Liu, C. K., and Popović, Z. Momentum-based Parameterization of Dynamic Character Motion (Graphical Models, Article in Press)

Video

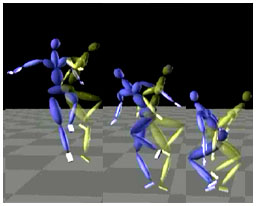

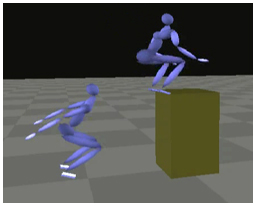

Rapid editing of two consecutive broad jumps from a motion capture sequence (mp4, 10M, with audio)

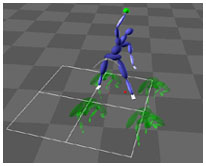

A family of volleyball motions that parameterizes the space of landing positions (mp4, 6.9M, with audio)

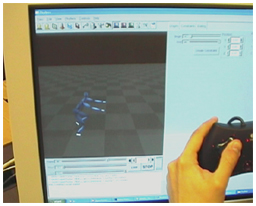

Interactive control of a realistic motion (mov, 19.8M, with audio)

Physically unrealistic motion (mp4, 1.6M)

Project members

This research is supported by

University of Washington Animation Labs

National Science Foundation

Information Technology Research

Alfred P. Sloan Fellowship

Electronic Arts

Sony

Microsoft Research